Know the concepts

Learn some basics before you start.

This page introduces basic concepts that are mentioned throughout the tutorial and the codebase. For more detail, see the definition sections, which serve as a quick reference manual.

What it all looks like

There are two sides of the coin that perform different tasks. We will refer to them as follows:

- Simulation / Home side
  - Contains a running environment that generates new changes in real time (also referred to as notifications) and sends them to the program side.
- Program / Algorithm side
  - Contains your running logic, including your codebase and some form of server that accepts new changes and handles them.
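To make the split concrete, here is a minimal sketch of how the program side might accept notifications and pass them to your logic. The `Notification` fields and the `ProgramSide` class are assumptions made for illustration; they are not the actual wire format or API of this project.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, List


@dataclass
class Notification:
    """Hypothetical shape of one change pushed by the home side."""
    object_name: str
    physics: str
    value: Any


@dataclass
class ProgramSide:
    """Accepts notifications and hands each one to the registered handlers."""
    handlers: List[Callable[[Notification], None]] = field(default_factory=list)

    def on_change(self, handler: Callable[[Notification], None]) -> None:
        # Register a piece of your logic that reacts to new changes.
        self.handlers.append(handler)

    def receive(self, notification: Notification) -> None:
        # Called once for every change the home side pushes.
        for handler in self.handlers:
            handler(notification)


program = ProgramSide()
seen: List[Notification] = []
program.on_change(seen.append)

# The home side reports that the door was opened.
program.receive(Notification("door", "open", True))
```

In a real setup the call to `receive` would be triggered by whatever server you run on the program side; here it is called directly to keep the sketch self-contained.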

Faces of simulation / home side

The simulation / home side can take many forms. At its most basic, it contains a database to store information and some mechanism to push notifications to the program side. Future releases will add a management system with a rich context display for both caregivers and developers, for viewing the state of the smart home, remote debugging, and more.

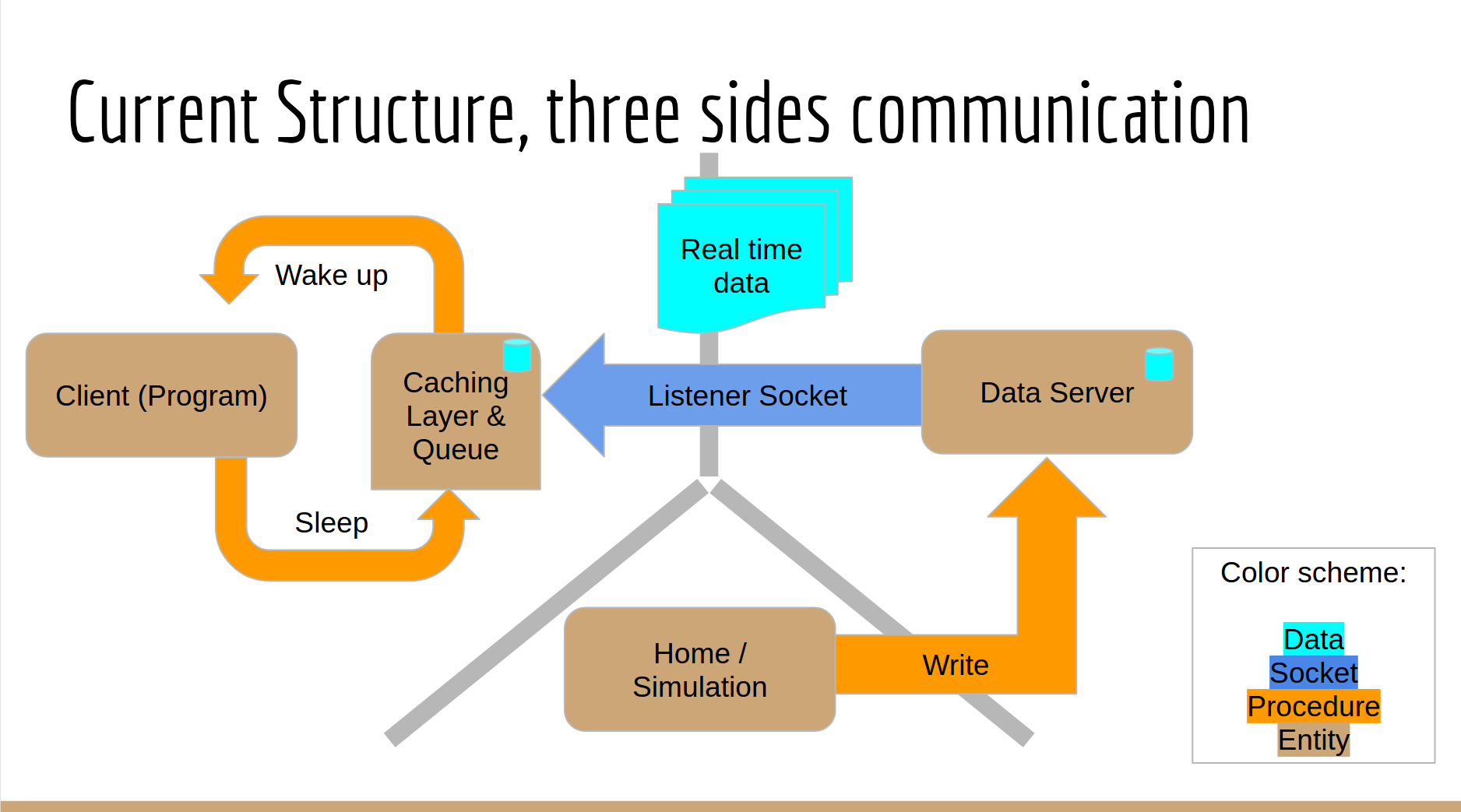

Be aware that the home/simulation side and the data server are currently bundled together. This is a temporary solution that trades the correctness of the structure for flexibility on the program side. The data server can be detached from the home/simulation side. Here is a structural overview of the running environment:

About environment

Environment refers to a set of humans, objects, and their states.

An environment can be either a simulation environment or a real-world environment. As of the 0.1 pre-alpha release, we focus on the simulation environment.

Difference between environment and simulation

This question is asked very often, and for the current release the answer is still vague. As stated above, an environment is either a simulation or an actual running environment. The goal is for the simulation to behave exactly like a running environment, so that developers can test their algorithms without problems. This is not yet true for the current release, because many gaps remain to be filled, such as the time it costs a human to perform an action.

For example, in an environment, we can have a chair and a human called "Jack".

About physics

We reason about an object through its physical states, which follow international physics standards.

A set of physical states is predefined for easy use. Here is the list:

| Physics | Unit | Dimension |
|---|---|---|
| speed | metre per second | 3 |
| luminance | candela | 1 |
| location | metre | 3 |
| rotation | rad | 3 |
| pressure | pascal | 1 |
| open | bool | 1 |

You are allowed to use any of them freely, and to add more if necessary.
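As a rough illustration, the predefined list can be mirrored in code as a lookup table. The `Physics` type, the `PHYSICS` dictionary, and the `check_value` helper are hypothetical names chosen for this sketch, and the "temperature" entry is an invented example of adding a state; none of them come from the codebase.

```python
from typing import Dict, NamedTuple, Sequence


class Physics(NamedTuple):
    unit: str
    dimension: int


# Mirrors the predefined list; the names and layout are illustrative only.
PHYSICS: Dict[str, Physics] = {
    "speed": Physics("metre per second", 3),
    "luminance": Physics("candela", 1),
    "location": Physics("metre", 3),
    "rotation": Physics("rad", 3),
    "pressure": Physics("pascal", 1),
    "open": Physics("bool", 1),
}


def check_value(name: str, value: Sequence) -> bool:
    """Return True if `value` has as many components as the physics requires."""
    return name in PHYSICS and len(value) == PHYSICS[name].dimension


# Adding a further physical state is one more entry (hypothetical example).
PHYSICS["temperature"] = Physics("kelvin", 1)
```

The dimension column is what makes a check like this possible: a location needs three components, while a pressure needs one.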

Change/Add physical states

In pre-alpha release 0.1, the physical state system is not yet robust, and each person uses an individual SQL DB. To fit your purpose, you are allowed to change the physical states by modifying the _init_physics function in "_schema.py". In a future version we will migrate to a public DB and only allow users to use predefined physics.

About objects and their states

Each object in the environment can have several physical states. An object's state can be changed by an action issued by a human.

For example, a chair can have a pressure of 30 pascal; a door can have an open state of 1, i.e. true. A human can sit on the chair to change its pressure, or open/close the door to change its open state.
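The chair and door example can be sketched as follows. `SmartObject` and `Action` are hypothetical classes written for this page, assuming the simplest possible model in which an action sets one physical state of its target to a new value.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class SmartObject:
    """An object in the environment with its current physical states."""
    name: str
    states: Dict[str, float] = field(default_factory=dict)


@dataclass
class Action:
    """An action sets one physical state of its target to a new value."""
    name: str
    physics: str
    new_value: float

    def apply(self, target: SmartObject) -> None:
        target.states[self.physics] = self.new_value


chair = SmartObject("chair", {"pressure": 0.0})
door = SmartObject("door", {"open": 0})

# A human sits on the chair and opens the door.
Action("sit", "pressure", 30.0).apply(chair)
Action("open door", "open", 1).apply(door)
```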

Actions of humans

Humans have the ability to issue actions, which have an impact (a change of physical state) on objects.

Humans in the environment

For simulation, you can add humans to the environment and assign them actions that impact the physical states of objects within the environment.

For some actions, the impact on the environment also depends on the human. For example, when a human sits on a chair, the pressure change of the chair depends on the human's weight. We introduce a variable called "human potential", or "hp" for short in calculations. Each human can have several human potentials. Currently, one action can be impacted by only one hp.
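A minimal sketch of the idea, assuming an hp named "weight_kg" and a fixed seat contact area: the resulting pressure is computed as force over area. The class names, the hp name, and the constants are all assumptions for illustration and are not taken from the codebase.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict


@dataclass
class Human:
    name: str
    # Each human can carry several human potentials (hp).
    potentials: Dict[str, float] = field(default_factory=dict)


@dataclass
class HpAction:
    """An action whose effect depends on exactly one human potential."""
    name: str
    physics: str
    hp_name: str
    effect: Callable[[float], float]

    def resulting_value(self, human: Human) -> float:
        return self.effect(human.potentials[self.hp_name])


G = 9.81         # gravitational acceleration in m/s^2
SEAT_AREA = 0.2  # assumed contact area of the seat in m^2

# pressure = force / area, with force = mass * g
sit = HpAction("sit", "pressure", "weight_kg", lambda kg: kg * G / SEAT_AREA)

jack = Human("Jack", {"weight_kg": 70.0})
chair_pressure = sit.resulting_value(jack)  # in pascal
```

Tying the action to a single hp name reflects the current limit of one hp per action.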

Human potential and its future

The human potential is designed to be much more than a factor that impacts physical states. In future releases, it will be used to track much more information. Here is a taste of it:

- Impact on how humans perform actions (for example, when tired, a human will not perform some actions).
- Human sentiment, used by developers to calculate the optimal interaction pattern.
- How humans interact with machines (for example, in a bad mood, a human uses different words).

and much more...

About sensors, observation states, and their limitations

Remember: as a program reasoning about a smart home environment, you cannot acquire the physical states of objects directly. Instead, you get sensor readings to reason with; these are referred to as "sensor readings" or "observation states of objects". Each sensor is allowed to watch one object, and it observes a set of that object's physical states.

Observation states look exactly like the physical states of an object, but their values might not be equal. This means it is part of your job, as an AI developer, to take the inconsistency into account.

Each sensor has a success rate, a value between 0 and 1 that indicates the chance that the sensor reports the correct value. For example, a pressure sensor can have a rate of 0.9, which indicates that 90% of the time, when a human sits on the watched object, the sensor's pressure reading changes; otherwise the reading does not change.
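The success rate can be sketched as a simple random draw: on success the sensor picks up the true value, on failure it keeps its previous reading. The `Sensor` class below is a hypothetical model written for this page, not the project's implementation.

```python
import random
from dataclasses import dataclass


@dataclass
class Sensor:
    """Observes one physical state and may miss a change."""
    physics: str
    success_rate: float   # between 0 and 1
    reading: float = 0.0  # last observed value

    def observe(self, true_value: float, rng: random.Random) -> float:
        # On success the reading follows the true value;
        # on failure the previous reading is kept.
        if rng.random() < self.success_rate:
            self.reading = true_value
        return self.reading


rng = random.Random(0)  # seeded so the run is repeatable
trials = 10_000
hits = 0
for _ in range(trials):
    sensor = Sensor("pressure", 0.9)
    if sensor.observe(30.0, rng) == 30.0:
        hits += 1

observed_rate = hits / trials  # close to the configured 0.9
```

Because a failed observation simply leaves the old reading in place, your algorithm cannot tell a missed change from no change, which is exactly the inconsistency you need to account for.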

Sensor monitoring and its future

In future releases, we will build a more complex sensor system:

- Track the various conditions a sensor can be in, for example out of battery.
- Automatically assign a sensor success rate depending on sensor type and manufacturer.
- Provide a reasoning layer for sensor states, allowing you to get both the sensor value and a predicted real value.
- Take chain effects into consideration, instead of a one-step success / failure of the sensor.
